Visual Studio will stop at the breakpoint, and now you can view the results. You can see a quick preview by hovering your mouse over the variable, or you can click “HTML Visualizer” in the context menu to see a raw HTML view of the results. If HTML was returned, the request completed without an error.

With the HTML retrieved, it’s time to parse it. HTML Agility Pack is a common tool, but you may have your own preference. Even LINQ can be used to query HTML, but for ease of use this example relies on the Agility Pack.

Before you parse the HTML, you need to know a little about the structure of the page, so that you know which elements to use as markers and can extract only the links you want rather than every link on the page.
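As a rough sketch of that idea, the following uses the HTML Agility Pack to pull only the links that sit inside list items, rather than every anchor on the page. The sample markup, the `LinkParserSketch` and `ExtractListLinks` names, and the `//li/a[@href]` XPath are assumptions for illustration; the real page structure has to be inspected first.

```csharp
using System;
using System.Collections.Generic;
using HtmlAgilityPack; // NuGet package: HtmlAgilityPack

public static class LinkParserSketch
{
    // Returns the href of every <a> that is a direct child of an <li>,
    // skipping links elsewhere on the page (navigation, footers, and so on).
    public static List<string> ExtractListLinks(string html)
    {
        var document = new HtmlDocument();
        document.LoadHtml(html);

        var links = new List<string>();

        // SelectNodes returns null (not an empty collection) when nothing matches.
        HtmlNodeCollection nodes = document.DocumentNode.SelectNodes("//li/a[@href]");
        if (nodes == null)
        {
            return links;
        }

        foreach (HtmlNode node in nodes)
        {
            links.Add(node.GetAttributeValue("href", string.Empty));
        }

        return links;
    }

    public static void Main()
    {
        // Hypothetical sample markup standing in for the downloaded page.
        string html =
            "<div><a href=\"/wiki/Main_Page\">Home</a></div>" +
            "<ul>" +
            "<li><a href=\"/wiki/Ada_Lovelace\">Ada Lovelace</a></li>" +
            "<li><a href=\"/wiki/Grace_Hopper\">Grace Hopper</a></li>" +
            "</ul>";

        foreach (string link in ExtractListLinks(html))
        {
            Console.WriteLine(link);
        }
    }
}
```

The XPath expression is the "marker" here: tightening or loosening it is how you control which links are kept.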


Because we already know the page that we want to scrape, a simple URL variable can be added to the HomeController’s Index() method. The HomeController Index() method is the default call when you first open an MVC web application, so add the request code to the Index() method in the HomeController file.

With the code to make the HTTP request done, we still haven’t parsed anything, but now is a good time to run the code to ensure that the Wikipedia HTML is returned instead of an error. Set a breakpoint in the Index() method at the following line: `return View();` This lets you use the Visual Studio debugger UI to view the results. You can then test the code by clicking the “Run” button in the Visual Studio menu.
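A minimal sketch of the request code described above, assuming an ASP.NET Core MVC project. The URL value, the `PageUrl` constant, the `CallUrl` helper, and the User-Agent string are all assumptions for illustration and are not taken from the text.

```csharp
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;

public class HomeController : Controller
{
    // Assumed address of the Wikipedia list page; adjust to the page you want.
    public const string PageUrl = "https://en.wikipedia.org/wiki/List_of_programmers";

    // One shared HttpClient for the lifetime of the application.
    private static readonly HttpClient Client = CreateClient();

    private static HttpClient CreateClient()
    {
        var client = new HttpClient();
        // Some sites reject requests that carry no User-Agent header.
        // The value below is a placeholder.
        client.DefaultRequestHeaders.UserAgent.ParseAdd("CSharpScraperSketch/1.0");
        return client;
    }

    public async Task<IActionResult> Index()
    {
        string url = PageUrl;
        string response = await CallUrl(url);

        // Set the breakpoint on the next line and inspect "response".
        return View();
    }

    // Hypothetical helper: downloads the page body as a string.
    private static Task<string> CallUrl(string fullUrl)
    {
        return Client.GetStringAsync(fullUrl);
    }
}
```

`GetStringAsync` throws an `HttpRequestException` on a non-success status code, so reaching the breakpoint with HTML in `response` is a reasonable sign that the request worked.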

This is just one simple example of what you can do with web scraping, but the general concept is to find a site that has the information you need, use C# to scrape the content, and store it for later use. In more complex projects, you can crawl pages using the links found on a top category page.

.NET Core includes asynchronous HTTP request libraries in the framework. These libraries are native to .NET, so no additional libraries are needed for basic requests. Before you make the request, you need to build the URL and store it in a variable.
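A small sketch of building URLs with the built-in `System.Uri` class, both for the starting page and for links found on it when crawling. The page address, the sample hrefs, and the `UrlBuilderSketch` and `ResolveLinks` names are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class UrlBuilderSketch
{
    // Resolves hrefs found on a page against that page's URL, dropping
    // duplicates and anything that is not an http or https link.
    public static List<Uri> ResolveLinks(Uri pageUrl, IEnumerable<string> hrefs)
    {
        var seen = new HashSet<string>();
        var result = new List<Uri>();

        foreach (string href in hrefs)
        {
            // Combines the page URL with a relative or absolute href.
            if (!Uri.TryCreate(pageUrl, href, out Uri absolute))
            {
                continue;
            }

            if (absolute.Scheme != Uri.UriSchemeHttp && absolute.Scheme != Uri.UriSchemeHttps)
            {
                continue;
            }

            // HashSet.Add returns false when the URL was already seen.
            if (seen.Add(absolute.AbsoluteUri))
            {
                result.Add(absolute);
            }
        }

        return result;
    }

    public static void Main()
    {
        // Assumed starting page, stored in a variable before any request is made.
        var pageUrl = new Uri("https://en.wikipedia.org/wiki/List_of_programmers");

        // Hypothetical hrefs as they might appear in the page markup.
        var hrefs = new[]
        {
            "/wiki/Ada_Lovelace",
            "/wiki/Grace_Hopper",
            "/wiki/Ada_Lovelace",
            "mailto:someone@example.com"
        };

        foreach (Uri link in ResolveLinks(pageUrl, hrefs))
        {
            Console.WriteLine(link.AbsoluteUri);
        }
    }
}
```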

This package makes it easy to parse the downloaded HTML and find the tags and information that you want to save. Finally, before you get started with coding the scraper, add the libraries that the code depends on to the codebase.

Making an HTTP Request to a Web Page in C#

Imagine that you have a scraping project where you need to scrape Wikipedia for information on famous programmers. Wikipedia has a page with a list of famous programmers, with links to each profile page. You can scrape this list and add it to a CSV file (or Excel spreadsheet) to save for future review and use.
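To illustrate the CSV step, here is a minimal sketch that writes scraped names and links to a file using only the standard library. The file name, column headers, sample rows, and the `CsvWriterSketch` helpers are assumptions for illustration; a dedicated CSV library may be a better fit for larger projects.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

public static class CsvWriterSketch
{
    // Quotes a field when it contains a comma, a double quote, or a line break,
    // doubling any embedded quotes (the usual CSV convention).
    public static string EscapeField(string value)
    {
        if (value.IndexOfAny(new[] { ',', '"', '\r', '\n' }) < 0)
        {
            return value;
        }

        return "\"" + value.Replace("\"", "\"\"") + "\"";
    }

    // Joins one row of fields into a single CSV line.
    public static string ToCsvLine(IEnumerable<string> fields)
    {
        return string.Join(",", fields.Select(field => EscapeField(field)));
    }

    public static void Main()
    {
        // Hypothetical rows standing in for scraped results.
        var rows = new List<string[]>
        {
            new[] { "Name", "Url" },
            new[] { "Ada Lovelace", "https://en.wikipedia.org/wiki/Ada_Lovelace" },
            new[] { "Hopper, Grace", "https://en.wikipedia.org/wiki/Grace_Hopper" }
        };

        // Writes one CSV line per row to a file in the working directory.
        File.WriteAllLines("programmers.csv", rows.Select(row => ToCsvLine(row)));
        Console.WriteLine("Wrote " + rows.Count + " lines to programmers.csv");
    }
}
```

The resulting file opens directly in Excel or any other spreadsheet tool.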

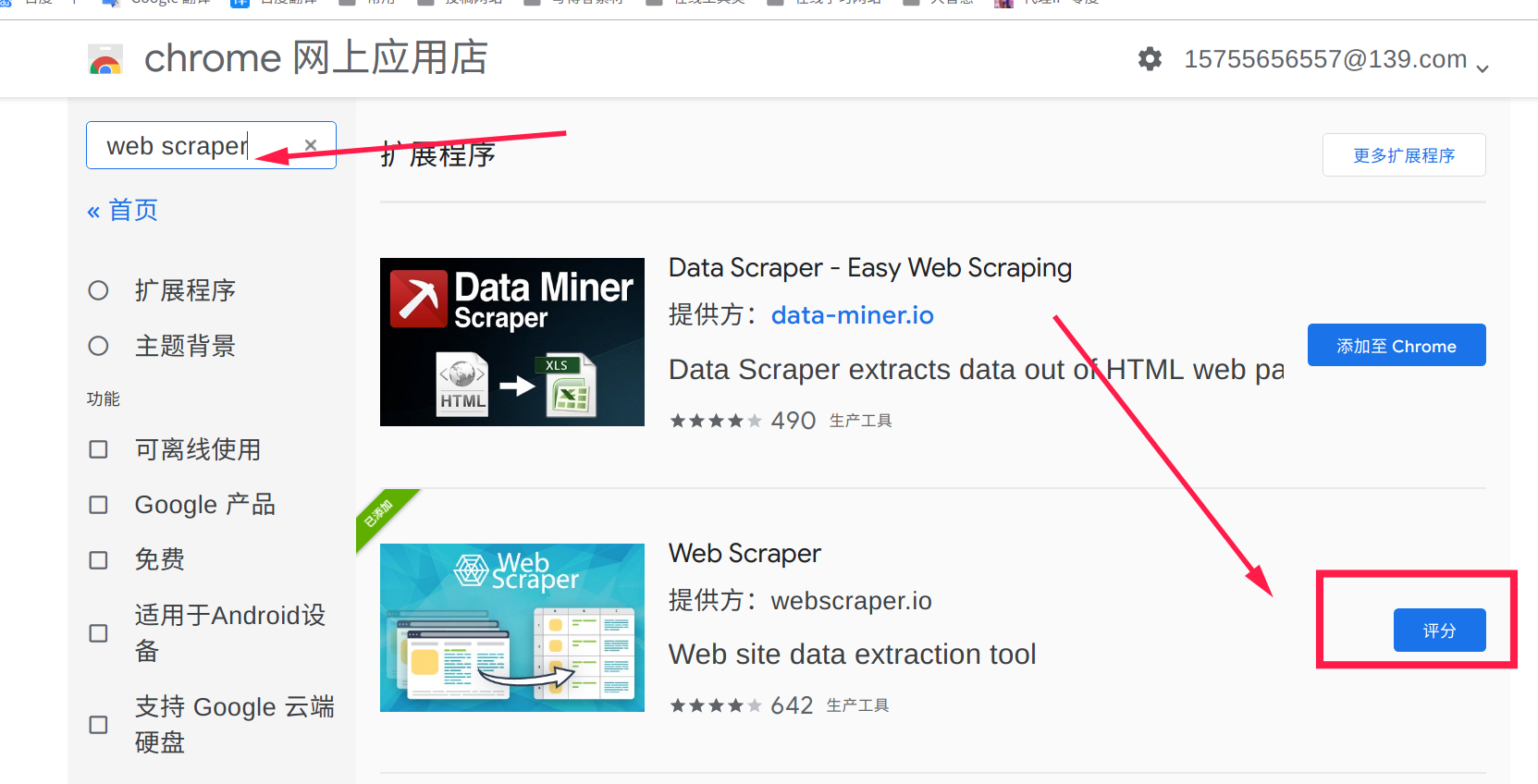

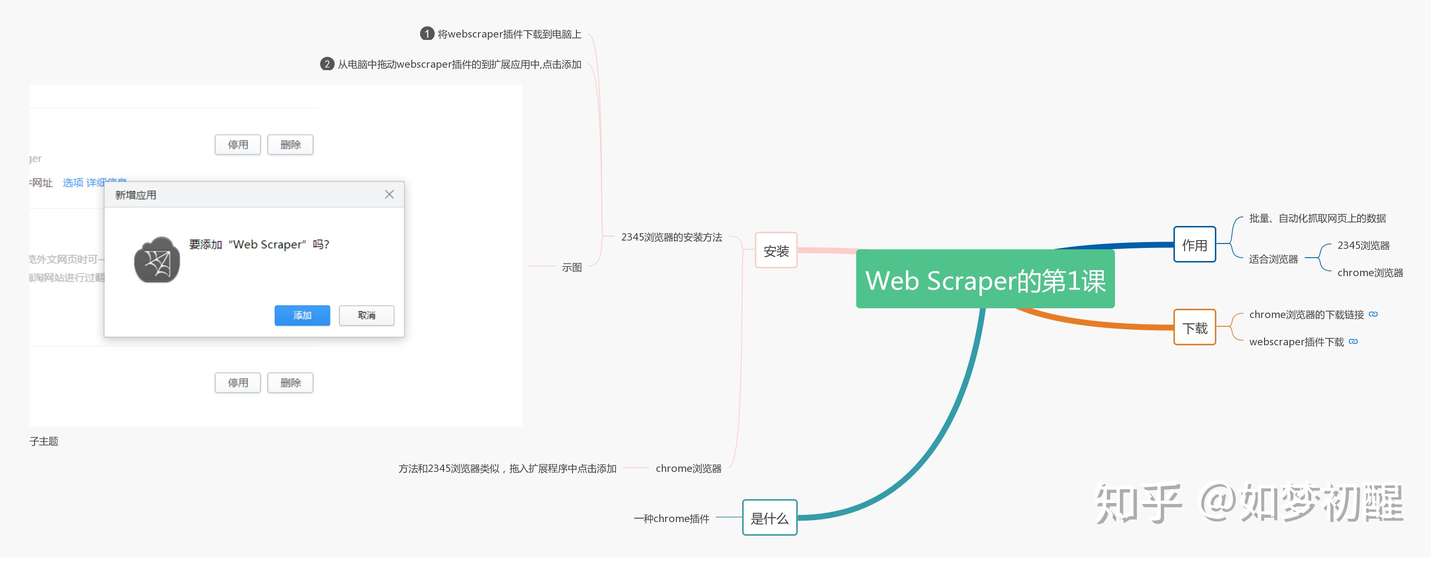


C# is still a popular backend programming language, and you might find yourself in need of it for scraping a web page (or multiple pages). In this article, we will cover scraping with C# using an HTTP request, parsing the results, and then extracting the information that you want to save. This method is common with basic scraping, but you will sometimes come across single-page web applications that render their content with client-side JavaScript, which require a different approach. We’ll also cover scraping these pages using PuppeteerSharp, Selenium WebDriver, and Headless Chrome.

Note: This article assumes that the reader is familiar with C# syntax and HTTP request libraries. The PuppeteerSharp and Selenium WebDriver .NET libraries are available to make integration of Headless Chrome easier for developers. The project uses the .NET Core 3.1 framework and the HTML Agility Pack for parsing raw HTML.

If you’re using C# as a language, you probably already use Visual Studio. The examples use a .NET Core Web Application project using MVC (Model View Controller). After you create a new project, go to the NuGet Package Manager, where you can add the libraries used throughout this tutorial. In NuGet, click the “Browse” tab and then type “HTML Agility Pack” to find the dependency. Install the package, and then you’re ready to go.
